We have a new short, 60-second video explaining the use of machine learning with beacon sensor data (best viewed full screen):

Read about AI Machine Learning with Beacons

When working with Machine Learning on beacon sensor data or indeed any data, it’s important to realise that AI machine learning isn’t magic. It isn’t foolproof and is ultimately only as good as the data passed in. Because it’s called AI and machine learning, people expect 100% accuracy when this often isn’t possible.

By way of a simple example, take a look at the recent tweet by Max Woolf in which he shows a video of the Google Cloud Vision API being asked to identify an ambiguous rotating image that looks like both a duck and a rabbit:

There are times when it thinks the image is a duck, other times a rabbit and other times when it identifies neither. Had the original learning data included ducks but no rabbits, the results would have been different. Had there been different images of ducks, the results would also have been different. Machine learning is only a complex form of pattern recognition. The accuracy of what you get out depends on a) the quality of the learning data and b) the quality of the data you present to the model when you try to identify something.

If your application of machine learning is safety critical and needs 100% accuracy, then machine learning might not be right for you.

AI machine learning is a great partner for sensor beacon data because it allows you to make sense of data that’s often complex and noisy. Instead of difficult traditional filtering and algorithmic analysis of the data, you train a model using existing data. The model is then used to detect, classify and predict. During training, machine learning can pick up on nuances in the data that a human programmer analysing it wouldn’t see.

One of the problems with the AI machine learning approach is that you use the resulting model but can’t look inside to see how it works. You can’t say why the model has classified something in a particular way or why it has predicted something. This can make it difficult for us humans to trust the output, or to understand what the model was ‘thinking’ when its classifications or predictions turn out to be incorrect. It also makes it impossible to provide rationales in situations such as ‘right to know’ legislation or causation auditing.

A new way to solve this problem is to use what are known as counterfactuals. Every model has inputs, in our case sensor beacon data and perhaps additional contextual data. It’s possible to apply different values to the inputs to find tipping points in the model. A simple example from acceleration xyz sensor data might be that a ‘falling’ indicator is based on z going over a certain value. Counterfactuals are generic statements that explain not how the model works but how it behaves. Recently, Google announced its What-If Tool, which can be used to derive such insights from TensorFlow models.
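To illustrate the counterfactual idea, here is a minimal Python sketch that treats a model as a black box, varies one input while holding the others fixed and reports where the prediction flips. The `falling_model` function and its coefficients are hypothetical stand-ins for a trained model, and `find_tipping_point` is an illustrative helper; this is not the What-If Tool's API, just the underlying probing idea.

```python
import math
from typing import Callable, Dict, Optional

Sample = Dict[str, float]


def falling_model(sample: Sample) -> float:
    """Hypothetical stand-in for an opaque trained model: returns P(falling).
    The coefficients are invented; only z (and a little x) matter here."""
    score = 4.0 * (abs(sample["z"]) - 2.0) + 0.5 * abs(sample["x"])
    return 1.0 / (1.0 + math.exp(-score))


def find_tipping_point(
    model: Callable[[Sample], float],
    base: Sample,
    feature: str,
    start: float,
    stop: float,
    steps: int = 1000,
    threshold: float = 0.5,
) -> Optional[float]:
    """Sweep one input from start to stop, holding the others at their base
    values, and return the first value where the predicted class changes."""

    def predicted(value: float) -> bool:
        probe = dict(base)
        probe[feature] = value
        return model(probe) >= threshold

    initial = predicted(start)
    for i in range(1, steps + 1):
        value = start + (stop - start) * i / steps
        if predicted(value) != initial:
            return value
    return None  # the prediction never flipped within [start, stop]


at_rest = {"x": 0.0, "y": 0.0, "z": 1.0}  # roughly 1 g on z when stationary
tip = find_tipping_point(falling_model, at_rest, "z", 1.0, 4.0)
print(f"'falling' is predicted once z reaches about {tip:.3f} g")
print(find_tipping_point(falling_model, at_rest, "y", -4.0, 4.0))  # y has no effect
```

The result ("the model predicts falling once z exceeds about 2 g") describes how the model behaves without opening it up, which is the essence of a counterfactual explanation.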

Read about Machine Learning and Beacons

There’s an article in The Manufacturer magazine, “Manufacturing: the numbers”, that highlights some figures from Hennik Research’s Annual Manufacturing Report.

In practice, we are finding that many organisations are struggling to develop the skills, business processes and organisational willpower to implement 4IR (the Fourth Industrial Revolution). Progress is relatively slow in many industries, held back by the uncertainties of Brexit, Europe and international trade tensions.

Nevertheless, we believe that once these political issues start to play out, the more forward-thinking manufacturers will realise they have to revolutionise their processes in order to compete in a market with complex labour availability and tighter margins due to tariffs. Manufacturers that are able to harness 4IR effectively will be the ones able to differentiate themselves, while the laggards will find themselves increasingly at a disadvantage.

Read about Sensing for Industry and IoT

Read about Machine Learning

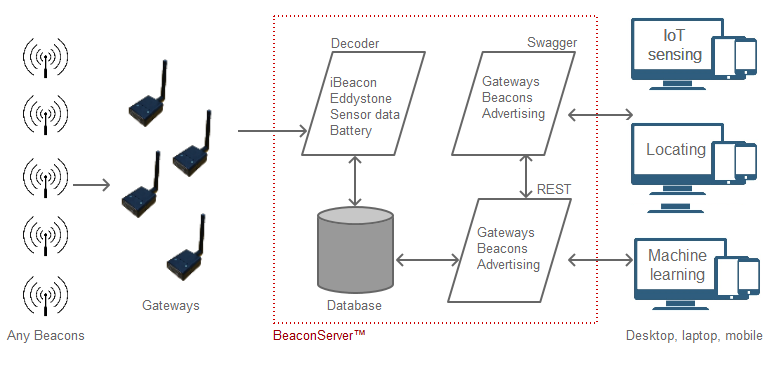

For a while now, we have had enquiries from companies interested in our BeaconRTLS but not wanting the whole thing. In some scenarios, such as IoT, machine learning and even locating, you just want to collect data rather than visualise it on maps/plans. Also, our BeaconRTLS™ was found to be overkill for small-scale projects that don’t need its extremely high throughput.

Today, we have released BeaconServer™. It’s a ready-made system to collect multi-location beacon advertising data and make it available to other people, systems and apps. It allows you to collect, save and query beacon data without any coding.

BeaconServer™ comes in the form of a self-install package. Please see the BeaconServer web site for more information.

Benedict Evans of Andreessen Horowitz, a venture capital firm in Silicon Valley, has a thought-provoking blog post on Ways to Think About Machine Learning.

Benedict asks what new things machine learning (ML) could enable. What important problems might it actually be able to solve? There are (too) many examples of machine learning being used to analyse images, audio and text, usually using the same example data. However, the main question for organisations is how they can use ML. What should they look for in data? What can be done?

Much of the emphasis is currently on making use of existing captured data. However, such data is often trapped in siloed company departments and usually needs copious amounts of pre-processing to make it suitable for machine learning.

We believe some easier-to-exploit and more profound opportunities exist if you use sensors attached to physical things to create new data. Data from physical things can provide deeper insights than existing company administrative data. The data can also be captured in more suitable formats and can be shared rather than hoarded by protectionist company departments.

For example, let’s take xyz acceleration, which is just one aspect of movement that beacons can detect. Machine learning allows accelerometer xyz readings of motor vibration to be used to predict that a motor is about to fail. Human posture, recorded as xyz, allows detection that patients are overly wobbly and might be due for a fall. The same posture information can be used to classify sports moves and fine-tune player movement. xyz from a vehicle can be used to classify how well a person is driving and hence allow insurers to provide behaviour-based insurance. xyz from human movement might even allow that movement to uniquely identify a person and be used as a form of identification. The possibilities and opportunities are extensive.

As previously mentioned, the above examples cover just one aspect of movement. If you also consider movement between zones, movement from stationary and fall detection itself, more use cases become evident. Sensor beacons also allow measurement of temperature, humidity, air pressure, light, magnetism (Hall effect), proximity and heart rate. There are so many possibilities that it can seem difficult to know where to start.

One solution is to look at your business rather than at technical solutions or even machine learning. Don’t expect or look for a ready-made solution or product, as the most appropriate machine learning solutions will usually need to be custom and proprietary to your company. Start by looking for aspects of your business that are currently very costly or very risky. How might more ‘intelligence’ be used to cut these costs or reduce these risks?

Practical examples are: How might we use less fuel? How might we use fewer people? How might we concentrate on the types of work that are least risky? How might we pre-empt costly or risky situations? How might we predict stoppages or over-runs?

Next, use your organisation’s domain experts to assess what data might be needed to measure these situations. Humans often have an insight that patterns in particular types of data will help classify and predict situations. They just can’t work out the patterns. That’s where machine learning excels.

A growing use of sensor beacons is in prognostics. Prognostics replaces human inspection with continuous, automated monitoring. This cuts costs and can potentially detect when things are about to fail rather than when they have already failed. Processes become proactive rather than reactive, allowing smoother process planning and reducing the knock-on effects of failures. It can also reduce the excessive, costly component replacement that’s sometimes used to avoid in-process failure.

Prognostics is implemented by examining the time series data from sensors, such as those monitoring temperature or vibration, in order to detect anomalies and forecast the remaining useful life of components. The problem with analysing such data is that it is usually complex and noisy.
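As a simple, non-ML baseline for the anomaly detection side of prognostics, the following sketch flags readings that sit far from the recent rolling mean. The function name, the 20-sample window, the 3-sigma limit and the synthetic temperature series are all illustrative assumptions, and the sketch does not attempt remaining-useful-life forecasting.

```python
from collections import deque
from statistics import mean, pstdev
from typing import Deque, Iterable, List, Tuple


def rolling_zscore_anomalies(
    readings: Iterable[float], window: int = 20, z_limit: float = 3.0
) -> List[Tuple[int, float]]:
    """Return (index, value) for readings more than z_limit standard deviations
    from the mean of the preceding `window` readings."""
    history: Deque[float] = deque(maxlen=window)
    anomalies: List[Tuple[int, float]] = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            # Skip flat history (sigma == 0) to avoid dividing by zero.
            if sigma > 0 and abs(value - mu) / sigma > z_limit:
                anomalies.append((i, value))
        history.append(value)
    return anomalies


# Synthetic motor temperature (deg C): small regular ripple plus one spike.
temps = [40.0 + 0.1 * (i % 5) for i in range(60)]
temps[45] = 47.0
print(rolling_zscore_anomalies(temps))
```

Fixed thresholds like this struggle once the noise varies with load, speed or ambient conditions, which is where the machine learning approaches discussed here come in.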

Machine learning’s capacity to analyse very large amounts of high-dimensional data can take prognostics to a new level. In some circumstances, adding further data, such as audio and image data, can enhance the capabilities and allow for continuously self-learning systems.

A downside of using machine learning is that it requires lots of data. Collecting the initial data usually requires a gateway, smartphone, tablet or IoT Edge device. Once the data has been obtained, it needs to be categorised, filtered and converted into a form suitable for machine learning. Machine learning then produces a ‘model’ that can be used in production systems for classification and prediction.
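The categorise/filter/convert step often amounts to cutting labelled time series into fixed-size windows and summarising each window as a feature vector. The sketch below assumes a hypothetical record format of `(x, y, z, label)` and a hypothetical `make_windows` helper, uses per-axis mean and standard deviation as features, and discards windows that straddle two labels.

```python
from statistics import mean, pstdev
from typing import List, Sequence, Tuple

# Hypothetical record format: (x, y, z, label) with x, y, z in g.
Reading = Tuple[float, float, float, str]


def make_windows(
    readings: Sequence[Reading], size: int, step: int
) -> Tuple[List[List[float]], List[str]]:
    """Cut labelled readings into overlapping windows of `size` samples,
    advancing by `step`, and summarise each window as a feature vector
    [mean_x, std_x, mean_y, std_y, mean_z, std_z]."""
    features: List[List[float]] = []
    labels: List[str] = []
    for start in range(0, len(readings) - size + 1, step):
        chunk = readings[start:start + size]
        chunk_labels = {r[3] for r in chunk}
        if len(chunk_labels) != 1:
            continue  # window straddles two activities: discard it
        row: List[float] = []
        for axis in range(3):
            values = [r[axis] for r in chunk]
            row += [mean(values), pstdev(values)]
        features.append(row)
        labels.append(chunk_labels.pop())
    return features, labels


recording = [(0.0, 0.0, 1.0, "standing")] * 8 + [
    (0.2 * (-1) ** i, 0.1, 1.0 + 0.3 * (-1) ** i, "walking") for i in range(8)
]
X, y = make_windows(recording, size=4, step=2)
print(len(X), y)
```

Overlapping windows (step smaller than size) give more training examples from the same recording; the window length and choice of features are design decisions that usually need experimentation.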

Mobile World Live, the media arm of the GSMA, has a new article titled IoT data impossible to use without AI.

The article title is over-dramatic because IoT data can be used without AI. However, as the article goes on to say, AI is …

‘vital to unlocking the “true potential” of IoT’

… that has more truth.

As usual, these things are said with no example or context. Let’s look at a simple example.

Let’s say we want to use x y z accelerometer data from one of our sensor beacons to measure a person’s movement. If we wanted to know whether the person is falling, we could test for limits on x y z. This doesn’t use AI. Now consider if we want to know whether a person is walking, standing, running or lying down (their ‘posture’). You could look at the data forever, searching for the right patterns. Even if you found a pattern, it probably wouldn’t work with a different person. AI machine learning provides a solution. A simplistic explanation is that it can take recordings of x y z for these postures from multiple people and create a model. This model can then be used with new data to classify the posture.
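A toy version of the posture example: the sketch below summarises each window of x y z readings as per-axis mean and standard deviation, then 'trains' a nearest-centroid classifier by averaging those features per posture. Nearest centroid is a deliberately simple stand-in for the kinds of models used in practice, the function names are hypothetical, and the sample readings (including which axis gravity falls on when lying down) are invented for illustration.

```python
import math
from statistics import mean, pstdev
from typing import Dict, List, Sequence, Tuple

XYZ = Tuple[float, float, float]


def features(window: Sequence[XYZ]) -> List[float]:
    """Per-axis mean (orientation) and standard deviation (amount of motion)."""
    out: List[float] = []
    for axis in range(3):
        values = [s[axis] for s in window]
        out += [mean(values), pstdev(values)]
    return out


def train(examples: Dict[str, List[Sequence[XYZ]]]) -> Dict[str, List[float]]:
    """'Training' here just averages the feature vectors for each posture."""
    model: Dict[str, List[float]] = {}
    for posture, windows in examples.items():
        vectors = [features(w) for w in windows]
        model[posture] = [mean(column) for column in zip(*vectors)]
    return model


def classify(model: Dict[str, List[float]], window: Sequence[XYZ]) -> str:
    """Pick the posture whose average feature vector is closest."""
    f = features(window)
    return min(model, key=lambda posture: math.dist(f, model[posture]))


# Invented recordings; in practice these would come from several people.
training = {
    "standing": [[(0.0, 0.0, 1.0), (0.02, 0.0, 0.98), (0.0, 0.02, 1.0), (0.0, 0.0, 1.02)]],
    "lying": [[(1.0, 0.0, 0.0), (0.98, 0.02, 0.0), (1.0, 0.0, 0.02), (1.02, 0.0, 0.0)]],
    "walking": [
        [(0.2, 0.0, 1.3), (-0.2, 0.1, 0.7)] * 2,
        [(0.3, 0.1, 1.2), (-0.1, 0.0, 0.8)] * 2,
    ],
}
model = train(training)
new_window = [(0.25, 0.05, 1.4), (-0.15, 0.0, 0.6)] * 2  # someone else walking
print(classify(model, new_window))
```

The new walking window does not match any training window exactly, yet it is still closest to the walking centroid; generalising across people in this way is what a real model has to do far more robustly.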

AI solves problems that previously seemed too complex for humans to solve. Solving such problems often improves efficiency, saves costs, increases competitiveness and can even create new intellectual property for your organisation.

However, don’t automatically turn to AI to make sense of sensor data. Don’t over-complicate things if the data can be analysed using conventional programming.
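By contrast, the fall check mentioned earlier can often be done with conventional programming. This sketch looks for a free-fall reading (acceleration magnitude well below 1 g) followed shortly by an impact (well above 1 g). The thresholds, sample gap and function names are hypothetical, not tuned figures, and checking the overall magnitude is one common variant of testing limits on x y z.

```python
import math
from typing import Optional, Sequence, Tuple

XYZ = Tuple[float, float, float]

# Hypothetical thresholds for illustration only; real values need testing.
FREE_FALL_G = 0.4  # |a| below this suggests free fall
IMPACT_G = 2.5     # |a| above this suggests an impact
MAX_GAP = 10       # max samples allowed between free fall and impact


def magnitude(sample: XYZ) -> float:
    """Overall acceleration in g, independent of device orientation."""
    return math.sqrt(sum(v * v for v in sample))


def detect_fall(samples: Sequence[XYZ]) -> bool:
    """True if a free-fall reading is followed by an impact within MAX_GAP
    samples. Plain threshold logic: no machine learning involved."""
    last_free_fall: Optional[int] = None
    for i, sample in enumerate(samples):
        g = magnitude(sample)
        if g < FREE_FALL_G:
            last_free_fall = i
        elif g > IMPACT_G and last_free_fall is not None and i - last_free_fall <= MAX_GAP:
            return True
    return False


at_rest = [(0.0, 0.0, 1.0)] * 5
fall = at_rest + [(0.0, 0.0, 0.1)] * 3 + [(0.5, 0.3, 3.0)] + at_rest
bump = at_rest + [(0.0, 0.0, 3.0)] + at_rest  # impact without free fall
print(detect_fall(fall), detect_fall(bump))
```

Requiring free fall before the impact is what stops an ordinary knock against the beacon from being reported as a fall.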

As mentioned yesterday in the Mr Beacon interview with Ajay Malik, beacon positioning and sensor data will increasingly be used as input for AI machine learning (ML). Beacons are a great way of providing the large amounts of data required by ML.

At BeaconZone, we have recently started using beacon data in machine learning. This has been a natural progression from using our BeaconRTLS to collect large data sets. We have a new subsidiary, Sensor Cognition, that provides services to extract and use intelligence from sensor data. Unlike most other ML companies, we aren’t interested in computer vision or speech and language recognition, even though the first two could be considered to work on sensor data. Instead, we specialise in extracting intelligence from industrial time series position and sensor data.

Engage with us to receive free information on how to get started. Discover how to jump start solutions for your organisation’s unique challenges.

BeaconZone founder, Simon Judge has a new article on LinkedIn on Discovering The Value of Data.

Take a fresh look at the data created by beacons and how this can lead to insights generated via AI machine learning.