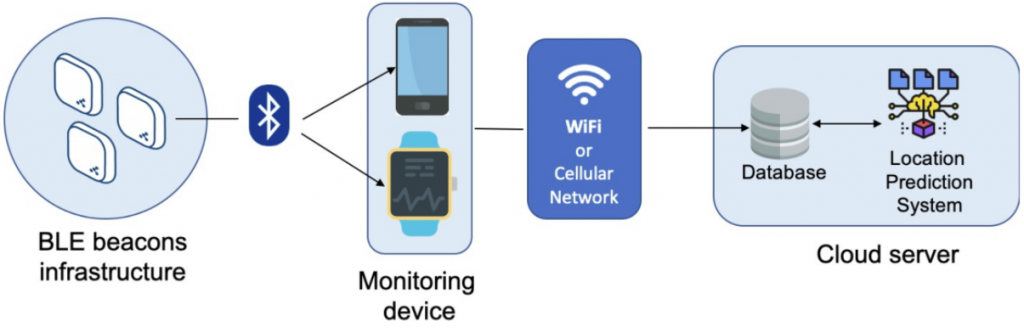

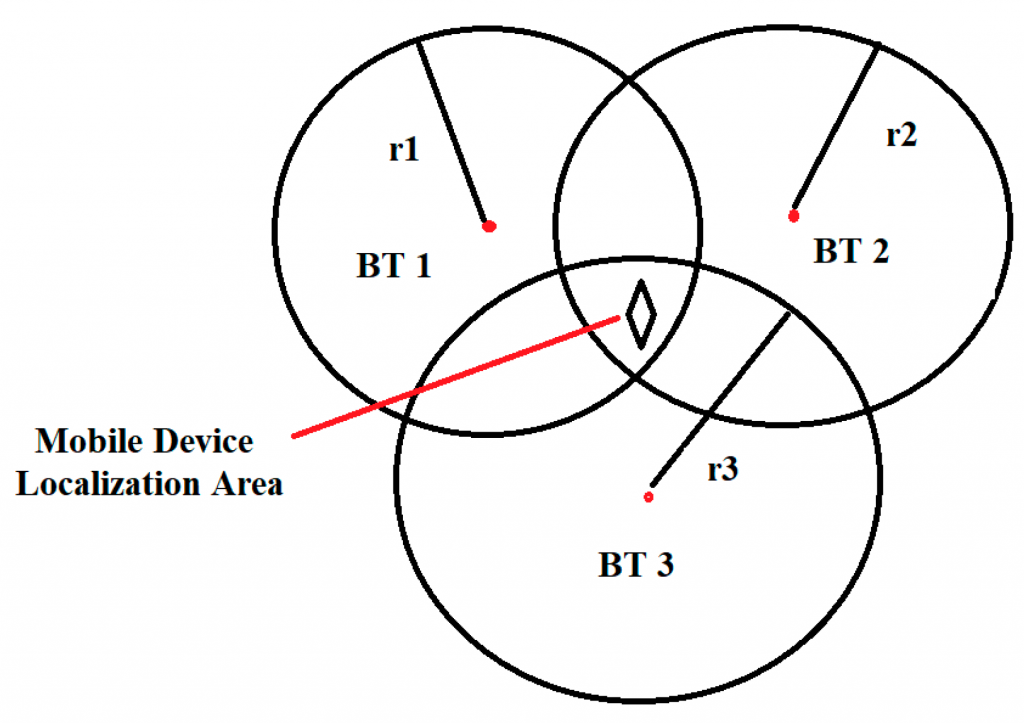

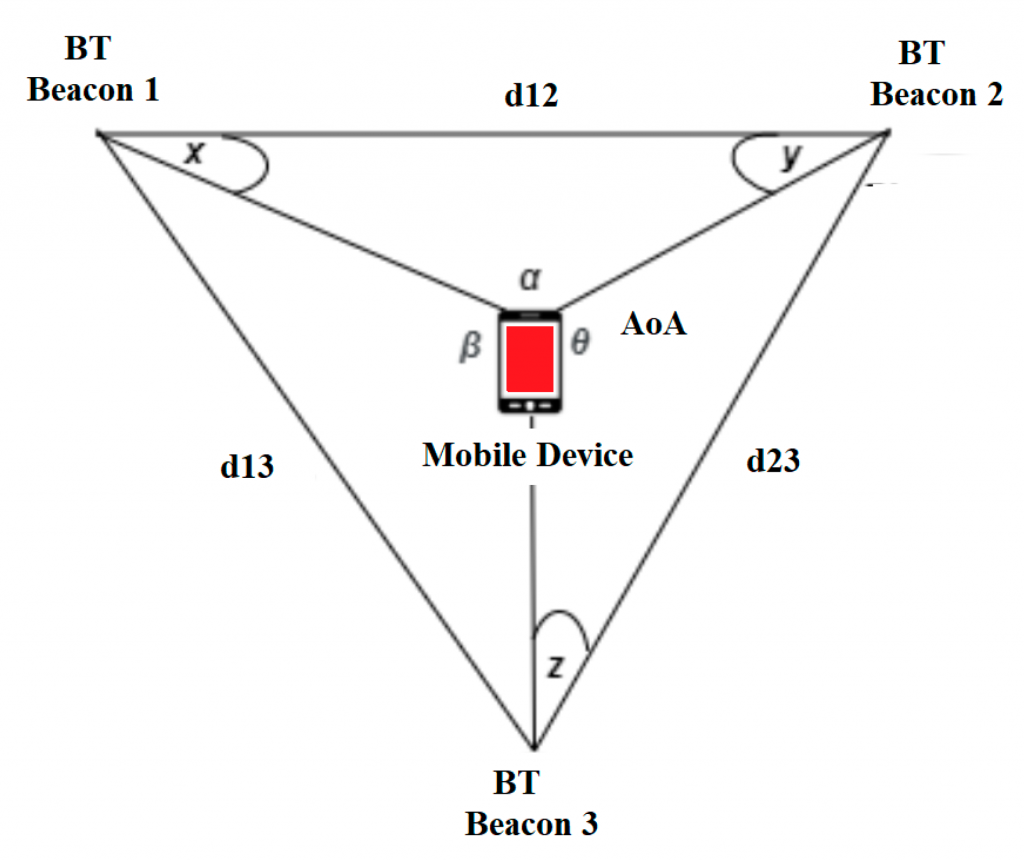

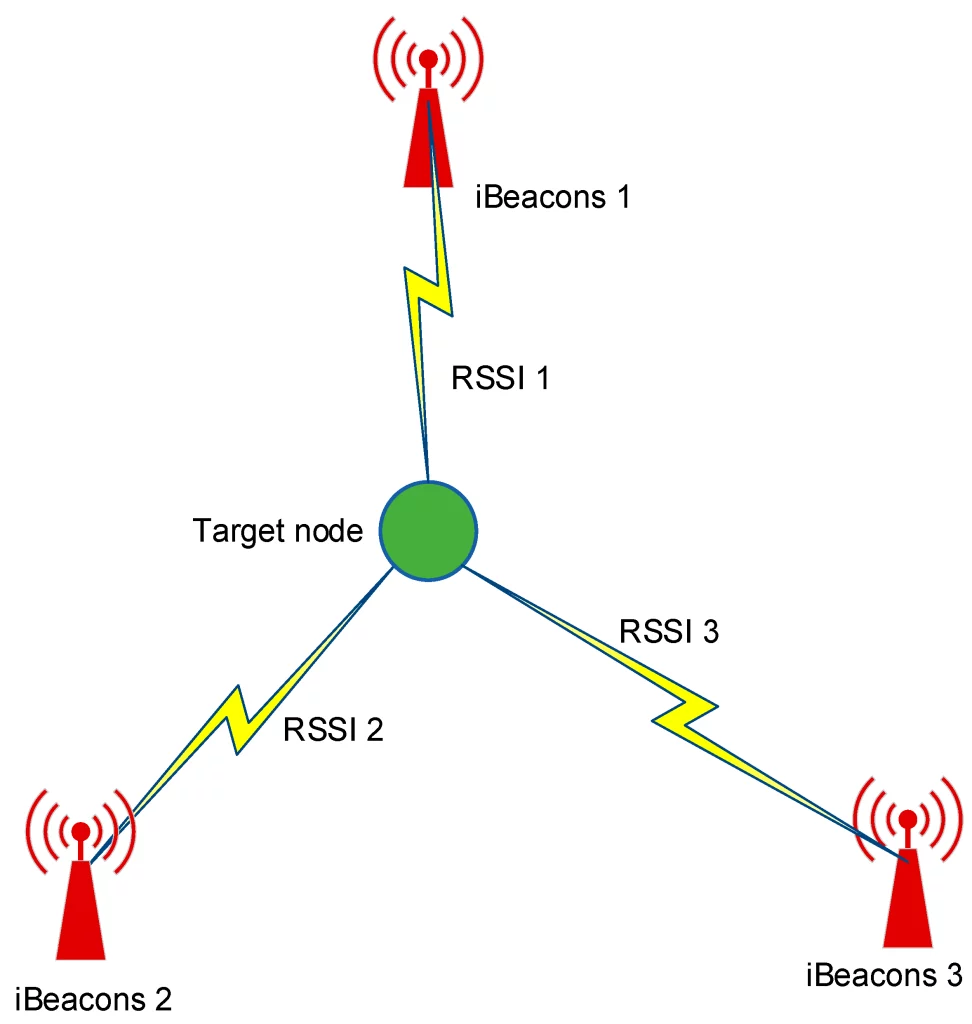

The article "Improved RSSI Indoor Localization in IoT Systems with Machine Learning Algorithms", by Ruvan Abeysekera and co-authors, focuses on improving indoor localisation in Internet of Things (IoT) systems using machine learning algorithms. It addresses the limitations of GPS in indoor environments and explores the use of Bluetooth Low Energy (BLE) nodes and Received Signal Strength Indicator (RSSI) values for more accurate localisation.

Because GPS is ineffective indoors, the paper emphasises the need for alternative indoor localisation methods, which are crucial for applications such as smart cities, transportation, and emergency services.

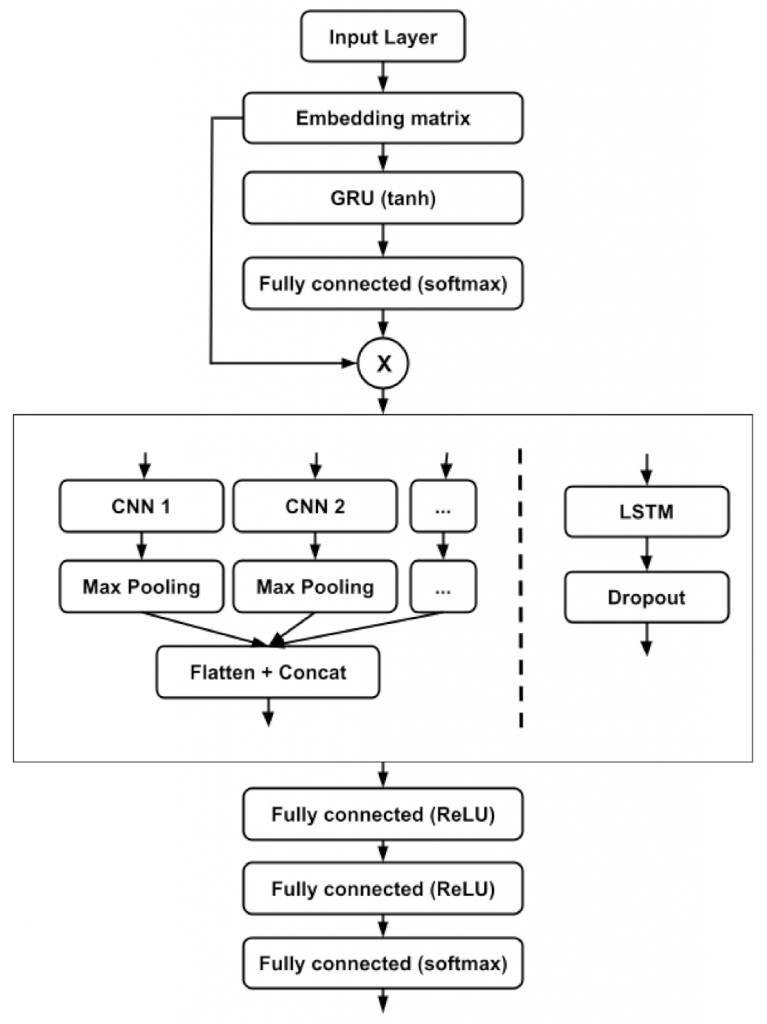

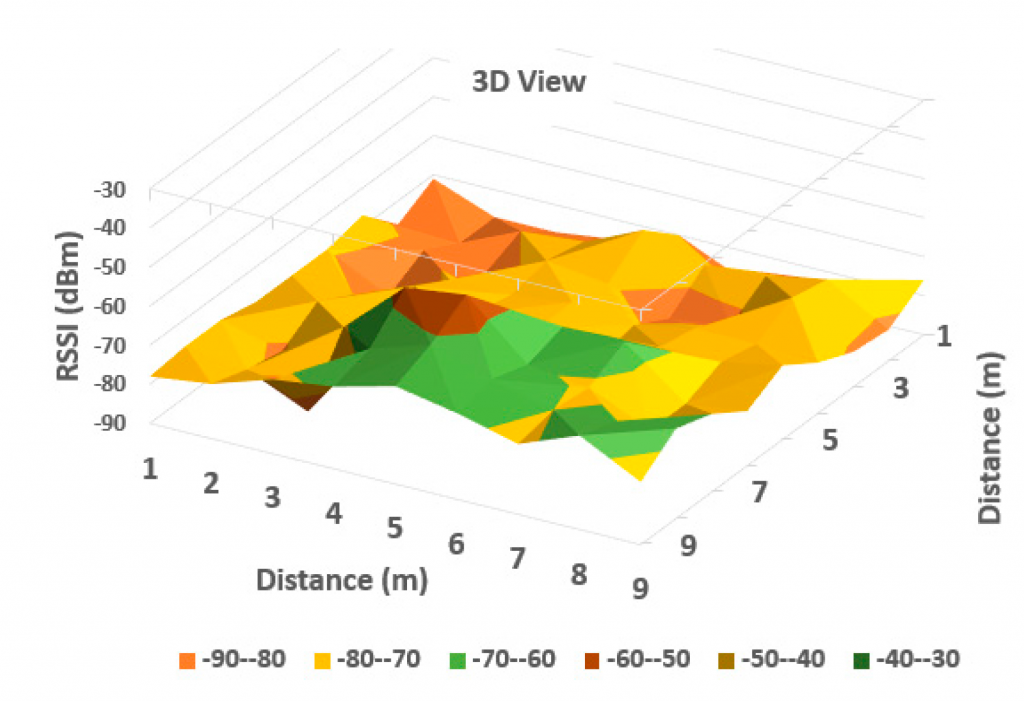

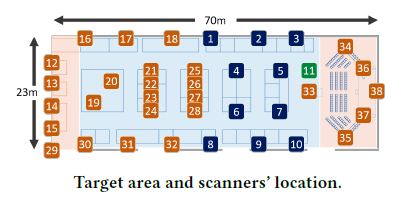

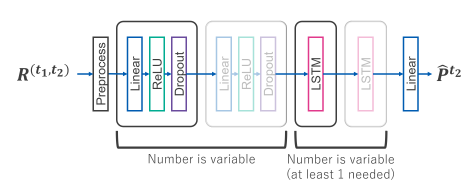

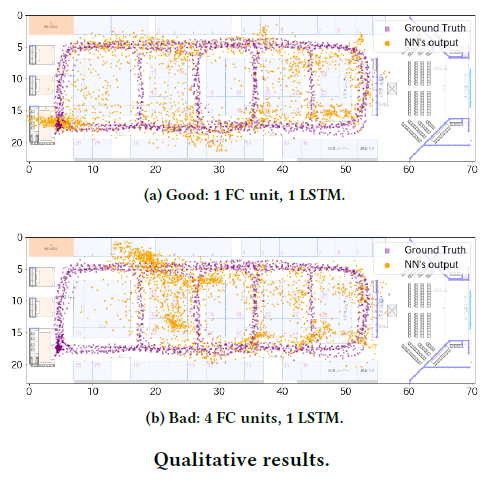

The study uses machine learning algorithms to process RSSI data collected from Bluetooth nodes in complex indoor environments. K-Nearest Neighbors (KNN), Support Vector Machine (SVM), and Feed Forward Neural Network (FFNN) models are used, achieving accuracies of approximately 85%, 84%, and 76% respectively.
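To make the idea of classifying a location from RSSI values more concrete, here is a minimal KNN sketch in plain Python. It is not the paper's implementation: the fingerprint values, the room labels, the `TRAINING` list, and the `knn_predict` function are all hypothetical and chosen only for illustration.

```python
import math
from collections import Counter

# Hypothetical fingerprints (not from the paper): RSSI in dBm measured
# from three BLE nodes, paired with the room where it was recorded.
TRAINING = [
    ((-45, -70, -80), "room_a"),
    ((-48, -72, -78), "room_a"),
    ((-50, -68, -82), "room_a"),
    ((-75, -46, -70), "room_b"),
    ((-72, -50, -73), "room_b"),
    ((-78, -44, -69), "room_b"),
    ((-80, -72, -47), "room_c"),
    ((-79, -75, -50), "room_c"),
    ((-83, -70, -45), "room_c"),
]


def knn_predict(sample, training, k=3):
    """Return the majority label among the k nearest fingerprints."""
    # Rank stored fingerprints by Euclidean distance to the new sample.
    by_distance = sorted(training, key=lambda item: math.dist(sample, item[0]))
    # Count the labels of the k closest fingerprints and pick the winner.
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    print(knn_predict((-47, -71, -79), TRAINING))
```

The value of `k` is itself a hyperparameter. In practice it would be chosen by validating on held-out measurements rather than fixed at 3 as in this sketch.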

The RSSI data is also processed using techniques such as the weighted least-squares method and moving average filters. The paper further discusses the importance of hyperparameter tuning for improving the performance of the machine learning models.
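As an illustration of the filtering step, the sketch below applies a simple moving average to a stream of RSSI readings. The window size, the sample readings, and the function name `moving_average` are assumptions made for this example. The summary does not state which window or filter variant the paper uses.

```python
from collections import deque


def moving_average(readings, window=3):
    """Smooth RSSI readings with a trailing moving average.

    Early outputs average over fewer samples until the window fills.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    recent = deque(maxlen=window)  # holds only the latest `window` readings
    smoothed = []
    for value in readings:
        recent.append(value)
        smoothed.append(sum(recent) / len(recent))
    return smoothed


if __name__ == "__main__":
    # Hypothetical readings in dBm; the -90 mimics a brief signal drop.
    print(moving_average([-60, -62, -90, -61, -59], window=3))
```

A larger window suppresses short-lived fluctuations more strongly but makes the smoothed signal slower to follow a device that is actually moving, so the window is a trade-off between stability and responsiveness.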

The research claims to provide a significant advance in indoor localisation, highlighting the potential of machine learning to overcome the limitations of GPS-based systems in indoor environments.