In our previous post on iBeacon Microlocation Accuracy we explained how distance can be inferred from the received signal strength indicator (RSSI). We also explained how techniques such as trilateration, calibration and angle of arrival (AoA) can be used to improve location accuracy.
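As a quick reminder of how RSSI can map to distance, here is a minimal sketch using the log-distance path loss model, which is one common approach. The function name and the default values (a measured power of -59 dBm at 1m and a path loss exponent of 2.0, roughly free space) are our assumptions for illustration. Real environments need calibration.

```python
def rssi_to_distance(rssi_dbm, measured_power_dbm=-59.0, path_loss_exponent=2.0):
    """Estimate distance in metres from RSSI with the log-distance path loss model.

    measured_power_dbm: assumed RSSI at 1 m (illustrative default, not a measured value).
    path_loss_exponent: assumed environment factor; about 2 in free space, higher indoors.
    """
    return 10 ** ((measured_power_dbm - rssi_dbm) / (10 * path_loss_exponent))


# Weaker signals map to larger estimated distances.
for rssi in (-59, -69, -79):
    print(f"{rssi} dBm -> {rssi_to_distance(rssi):.2f} m")
```

Because the relationship is logarithmic, a few dB of noise at long range shifts the estimate by metres, which is why RSSI alone gives only a rough distance.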

New research presented at the 17th Annual International Conference on Mobile Systems, Applications, and Services (MobiSys ’19) by researchers from Nagoya University, Japan, looks into using machine learning to process Bluetooth RSSI to obtain location.

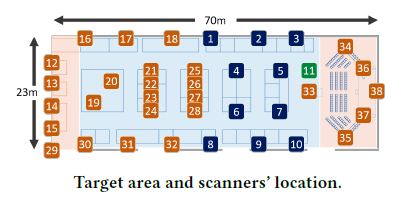

Their study was based on a large-scale exhibition where they placed scanning devices:

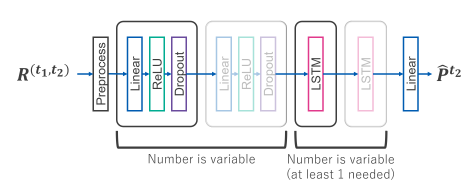

They implemented an LSTM neural network and experimented with the number of layers:

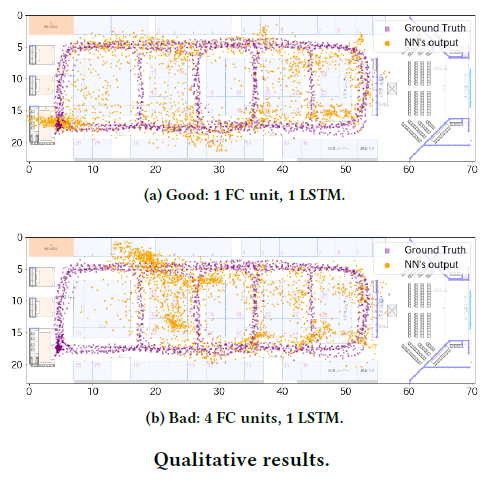

They obtained the best results with the simplest machine learning model, which had only one LSTM layer.

As is often the case with machine learning, more complex models overfit the training data and then don’t work well with new, subsequent data. Simple models tend to be more general and work not just with the training data but with new scenarios.
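To give a feel for what a single LSTM layer does with a sequence of RSSI readings, here is a minimal pure-Python sketch of the forward pass. It is not the researchers' model: the class name `TinyLSTM`, the layer sizes, the input scaling and the random untrained weights are all our assumptions, and biases are left out to keep it short.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def make_matrix(rows, cols, rng):
    # Small random weights; a real model would learn these from training data.
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class TinyLSTM:
    """Hypothetical one-layer LSTM followed by a linear layer that outputs (x, y)."""

    def __init__(self, n_inputs, n_hidden, seed=0):
        rng = random.Random(seed)
        self.n_hidden = n_hidden
        # One weight matrix per gate, applied to [input, previous hidden state].
        self.gates = {
            name: make_matrix(n_hidden, n_inputs + n_hidden, rng)
            for name in ("input", "forget", "output", "candidate")
        }
        self.head = make_matrix(2, n_hidden, rng)

    def predict(self, sequence):
        h = [0.0] * self.n_hidden  # hidden state
        c = [0.0] * self.n_hidden  # cell state (the "memory")
        for rssi_vector in sequence:
            z = list(rssi_vector) + h
            i = [sigmoid(a) for a in matvec(self.gates["input"], z)]
            f = [sigmoid(a) for a in matvec(self.gates["forget"], z)]
            o = [sigmoid(a) for a in matvec(self.gates["output"], z)]
            g = [math.tanh(a) for a in matvec(self.gates["candidate"], z)]
            # Keep part of the old memory, add part of the new candidate.
            c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
            h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
        return matvec(self.head, h)


# Three time steps of RSSI (dBm) from three scanners; values are made up.
window = [[-70, -82, -91], [-68, -85, -90], [-66, -88, -93]]
scaled = [[(r + 100) / 100 for r in step] for step in window]  # assumed scaling
model = TinyLSTM(n_inputs=3, n_hidden=4)
x, y = model.predict(scaled)
print(x, y)  # meaningless until the weights are trained
```

Stacking more layers means feeding each step's hidden state into another LSTM. That adds parameters, which is where the risk of overfitting comes from.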

The researchers achieved an accuracy of 2.44m at the 75th percentile, meaning that 75% of the location estimates were within 2.44m of the true position. 2.44m is ok and compares well with accuracies of about 1.5m within a shorter-range confined space and 5m at longer distances achieved using conventional methods. As with all machine learning, further parameter tuning usually improves the accuracy but can take a lot of time and effort. It’s our experience that using other types of RNN in conjunction with LSTM can also improve accuracy.
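To make the percentile figure concrete, here is a small sketch of how an error at a given percentile can be computed from a list of location errors. The function name and the error values are made up for illustration and are not taken from the paper. We use the nearest-rank definition; other definitions interpolate and give slightly different numbers.

```python
import math


def percentile_error(errors_m, pct):
    """Nearest-rank percentile: the smallest error that at least pct% of samples do not exceed."""
    ordered = sorted(errors_m)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


# Made-up location errors in metres (not the paper's data).
errors = [0.4, 0.9, 1.1, 1.6, 2.0, 2.3, 3.5, 6.2]
p75 = percentile_error(errors, 75)
print(f"75% of estimates are within {p75} m")
```

A percentile is often quoted instead of the mean because a few very large errors, like the 6.2m value here, would otherwise dominate the result.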

If you want to view the research paper you need to download all the papers from the conference (zip) and extract p558-uranoA.pdf. Some of the other papers also make interesting, if not directly relevant, reading.

Read about AI Machine Learning with Beacons